Introduction

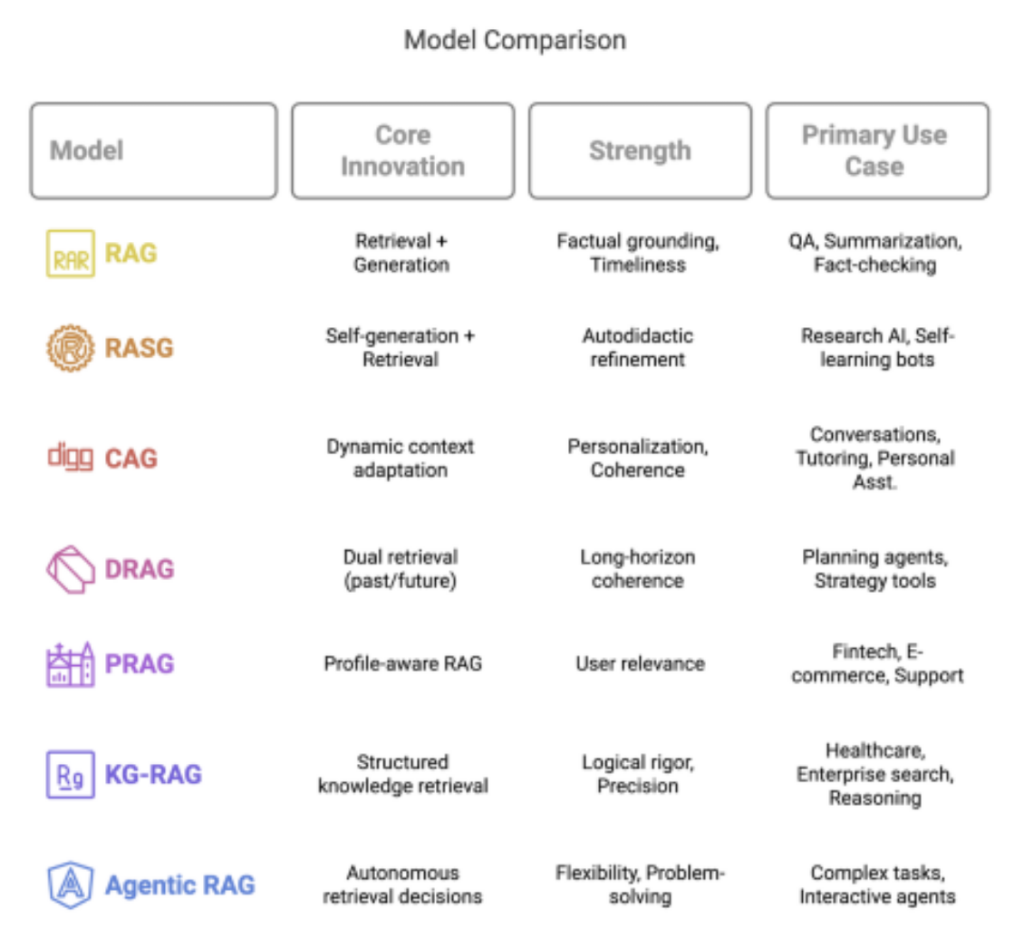

Standard large language models (LLMs) encode vast knowledge but struggle with hallucinations and with accessing real-time information because their training data is static. These limitations have spurred the development of more dynamic AI architectures. Retrieval-Augmented Generation (RAG) has emerged as a key solution, integrating external knowledge into the generation process. However, the field is rapidly evolving beyond basic RAG. Newer approaches such as RASG (Retrieval-Augmented Self-Generated learning), CAG (Context-Aware Generation), and various hybrids are extending AI’s ability to understand, learn, reason, and interact. This article explores this expanding landscape of augmented generation, covering the mechanisms, applications, challenges, and future potential of each approach.

Retrieval-Augmented Generation (RAG): Laying the Groundwork

RAG enhances LLMs by grounding their responses in external data.

The Core Mechanism:

- Retrieval: A user’s query is used to search an external knowledge base (often a vector database) for relevant information snippets.

- Augmentation: The retrieved context is combined with the original query.

- Generation: This augmented prompt is fed to the LLM, which generates a response informed by the retrieved facts.
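The three steps can be sketched in a few lines of Python. This is a minimal, self-contained illustration, not a production pipeline. The `embed` function is a hypothetical stand-in that uses bag-of-words term counts instead of a neural embedding model. The in-memory `DOCS` dictionary stands in for a vector database. `generate` is a placeholder where a real LLM client call would go.

```python
import math
import re
from collections import Counter

# Toy knowledge base (hypothetical documents for illustration only).
DOCS = {
    "doc1": "RAG retrieves external documents and adds them to the prompt.",
    "doc2": "Vector databases store embeddings for similarity search.",
    "doc3": "Large language models are trained on static data.",
}

def embed(text: str) -> Counter:
    """Stand-in 'embedding': a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, k: int = 2) -> list[tuple[str, float]]:
    """Step 1 (Retrieval): rank documents by similarity to the query, keep the top k."""
    q = embed(query)
    scored = [(doc_id, cosine(q, embed(text))) for doc_id, text in DOCS.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]

def augment(query: str, hits: list[tuple[str, float]]) -> str:
    """Step 2 (Augmentation): combine the retrieved snippets with the original query."""
    context = "\n".join(f"[{doc_id}] {DOCS[doc_id]}" for doc_id, _ in hits)
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"

def generate(prompt: str) -> str:
    """Step 3 (Generation): placeholder for an LLM call (hypothetical; swap in a real client)."""
    return f"<LLM response to a {len(prompt)}-character prompt>"

query = "How do vector databases support similarity search?"
hits = retrieve(query)
prompt = augment(query, hits)
print(hits[0][0])  # doc2
print(generate(prompt))
```

Swapping in a real embedding model and vector store would change `embed` and `retrieve`. The retrieve, augment, generate structure would stay the same.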

Strengths and Limitations: RAG reduces hallucinations, improves factuality, and allows access to current information. However, its effectiveness depends heavily on retrieval quality. Poorly retrieved information leads to poor outputs. RAG also adds latency and requires careful management of the external knowledge base.
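Output quality tracks retrieval quality. One way to guard against poor retrieval is to discard low-scoring hits before they reach the prompt and to let the model abstain when nothing relevant is found. The sketch below assumes the retriever returns similarity scores in [0, 1]. The `min_score` cutoff of 0.35, the `Hit` type, and the prompt wording are illustrative assumptions that would need tuning against real data.

```python
from dataclasses import dataclass

@dataclass
class Hit:
    doc_id: str
    score: float  # similarity score from the retriever, assumed to lie in [0, 1]

def select_context(hits: list[Hit], min_score: float = 0.35, k: int = 3) -> list[Hit]:
    """Keep at most k hits whose score clears min_score, best first."""
    good = [h for h in hits if h.score >= min_score]
    return sorted(good, key=lambda h: h.score, reverse=True)[:k]

def build_prompt(query: str, hits: list[Hit]) -> str:
    chosen = select_context(hits)
    if not chosen:
        # Nothing relevant enough: instruct the model to abstain rather than guess.
        return f"No supporting documents were found. Say you don't know.\nQuestion: {query}"
    sources = ", ".join(h.doc_id for h in chosen)
    return f"Use sources [{sources}] to answer.\nQuestion: {query}"

hits = [Hit("a", 0.82), Hit("b", 0.12), Hit("c", 0.41)]
print(build_prompt("What is RAG?", hits))
# Use sources [a, c] to answer.
# Question: What is RAG?
```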

RASG: Retrieval-Augmented Self-Generated Learning – The Autodidactic AI

RASG introduces a self-supervised learning loop that lets models learn more autonomously. Instead of only reacting to user prompts, a RASG model can initiate its own queries or hypotheses, retrieve relevant evidence, and then critique and refine its outputs or internal knowledge according to how well its generations agree with the retrieved evidence.

Core Features

- Autonomous Exploration: Proactively generates questions or content to explore topics

- Evidence-Based Refinement: Uses retrieved information to validate or correct its own generations

- Knowledge Improvement: Iteratively improves understanding through a generate-retrieve-critique cycle
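The generate-retrieve-critique cycle can be illustrated with a toy loop. This sketch rests on heavy simplifying assumptions:

- The self-generated hypotheses are a fixed list rather than model output.
- Retrieval is word overlap over a two-sentence hypothetical corpus.
- The `critique` heuristic (numbers must match and at least half the words must overlap) stands in for an LLM-based judge.
- `revise` simply falls back to the evidence text.

The sketch refines outputs only. Updating a model's internal knowledge is not modeled.

```python
import re

# Hypothetical evidence corpus the model can retrieve from.
CORPUS = [
    "water boils at 100 degrees celsius at sea level",
    "the moon orbits the earth roughly every 27 days",
]

def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(claim: str) -> str:
    """Retrieve: return the passage sharing the most words with the claim."""
    return max(CORPUS, key=lambda passage: len(tokens(claim) & tokens(passage)))

def critique(claim: str, evidence: str, min_overlap: float = 0.5) -> bool:
    """Heuristic self-critique: every number in the claim must appear in the
    evidence, and at least min_overlap of the claim's words must match."""
    words, ev = tokens(claim), tokens(evidence)
    if not words:
        return False
    numbers = {w for w in words if w.isdigit()}
    return numbers <= ev and len(words & ev) / len(words) >= min_overlap

def revise(claim: str, evidence: str) -> str:
    """Stand-in for an LLM rewrite: fall back to the evidence text."""
    return evidence

def rasg_loop(claims: list[str], max_rounds: int = 3) -> list[str]:
    """Retrieve evidence, critique, and refine until every claim is supported."""
    for _ in range(max_rounds):
        verdicts = [(c, retrieve(c)) for c in claims]
        if all(critique(c, ev) for c, ev in verdicts):
            break
        claims = [c if critique(c, ev) else revise(c, ev) for c, ev in verdicts]
    return claims

# "Self-generated" hypotheses (the second one is deliberately wrong).
hypotheses = [
    "water boils at 100 degrees celsius",
    "the moon orbits the earth every 50 days",
]
print(rasg_loop(hypotheses))
```

The first round flags the 50-day claim because its number is absent from the evidence, and the claim is revised. The second round finds every claim supported and stops.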

Use Cases

- Scientific hypothesis generation and testing

- Automated content validation

- Training autonomous agents in dynamic environments

Challenges: Ensuring the quality of self-generated queries and the accuracy of the self-critique mechanism are crucial.

CAG: Context-Aware Generation – Towards Conversational Fluency

While RAG focuses on external data, CAG emphasizes the interaction context, leading to more personalized and coherent responses. CAG adapts its output to conversational history (short-term memory); user profiles, preferences, and interaction history (long-term memory); and emotional tone and discourse structure.

Techniques

- Memory Modules: Implementing mechanisms to store and retrieve relevant contextual information

- Dynamic Prompting: Adjusting LLM prompts based on context

- User Modeling: Building representations of user preferences and interaction styles
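Memory modules, dynamic prompting, and user modeling can be combined in a small context manager. This sketch shows one possible way to structure it, not a reference design:

- A bounded `deque` holds the most recent turns as short-term memory.
- A hypothetical `UserProfile` holds long-term preferences.
- `build_prompt` assembles both into the prompt on every turn.

Tracking emotional tone and discourse structure is not modeled.

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class UserProfile:
    """Long-term memory: persistent user preferences (hypothetical fields)."""
    name: str
    preferences: dict = field(default_factory=dict)

class ContextManager:
    def __init__(self, profile: UserProfile, max_turns: int = 4):
        self.profile = profile
        # Short-term memory: only the most recent max_turns turns are kept.
        self.history = deque(maxlen=max_turns)

    def add_turn(self, role: str, text: str) -> None:
        self.history.append(f"{role}: {text}")

    def build_prompt(self, user_message: str) -> str:
        """Dynamic prompting: rebuild the prompt from profile and recent history."""
        prefs = "; ".join(f"{k}={v}" for k, v in sorted(self.profile.preferences.items()))
        lines = [
            f"You are assisting {self.profile.name}. Preferences: {prefs or 'none'}.",
            "Recent conversation:",
            *self.history,
            f"user: {user_message}",
        ]
        return "\n".join(lines)

profile = UserProfile("Ana", {"tone": "concise", "level": "beginner"})
cm = ContextManager(profile, max_turns=2)
cm.add_turn("user", "first")
cm.add_turn("assistant", "reply one")
cm.add_turn("user", "second")
prompt = cm.build_prompt("next question")
print(prompt)
```

Because the deque is capped at `max_turns`, older turns silently drop out. A fuller design might summarize evicted turns or store them for later retrieval instead of discarding them.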

Use Cases

- Highly personalized chatbots and virtual assistants

- Adaptive intelligent tutoring systems

- Dynamic narrative generation in interactive media

Challenges: Managing long-term memory effectively, ensuring user privacy, and handling ambiguous context are key concerns.

Hybrid Models and Advanced Variants – The Expanding Toolkit

Combining RAG, RASG, and CAG principles yields specialized architectures.

- DRAG (Dual RAG): Retrieves based on both past context and anticipated future goals, useful for planning

- PRAG (Personalized RAG): Tailors retrieval and generation to user profiles, improving relevance in recommendations or customer support

- KG-RAG (Knowledge Graph RAG): Uses structured knowledge graphs for retrieval, enabling better logical reasoning, especially in technical domains

- Agentic RAG: Empowers the LLM to decide when and how to use retrieval as part of a larger task or reasoning process

These models offer different strengths tailored to various needs.
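To make the KG-RAG idea concrete, here is a minimal sketch of its retrieval side. It assumes a small in-memory graph of hypothetical (subject, relation, object) triples, naive string-match entity linking, and a fixed two-hop limit. A production system would more likely use a graph database and a dedicated entity linker. The retrieved triples form an explicit multi-hop chain that can be serialized into the prompt.

```python
from collections import defaultdict

# Hypothetical knowledge graph of (subject, relation, object) triples.
TRIPLES = [
    ("pump_a", "part_of", "cooling_loop"),
    ("cooling_loop", "serves", "reactor_1"),
    ("pump_a", "manufactured_by", "acme"),
    ("reactor_1", "located_in", "building_7"),
]

def build_index(triples):
    """Map each entity to every triple it participates in (as subject or object)."""
    index = defaultdict(list)
    for s, r, o in triples:
        index[s].append((s, r, o))
        index[o].append((s, r, o))
    return index

def link_entities(query, index):
    """Naive entity linking: an entity is a seed if its name appears in the query."""
    return [entity for entity in index if entity in query.lower()]

def retrieve_subgraph(index, seeds, hops=2):
    """Breadth-first expansion from the seeds, collecting triples within `hops` steps."""
    frontier, seen, facts = set(seeds), set(seeds), []
    for _ in range(hops):
        next_frontier = set()
        for entity in frontier:
            for triple in index.get(entity, []):
                if triple not in facts:
                    facts.append(triple)
                for node in (triple[0], triple[2]):
                    if node not in seen:
                        seen.add(node)
                        next_frontier.add(node)
        frontier = next_frontier
    return facts

index = build_index(TRIPLES)
query = "Which reactor does pump_a ultimately serve?"
seeds = link_entities(query, index)
facts = retrieve_subgraph(index, seeds)
context = "\n".join(f"{s} {r} {o}" for s, r, o in facts)
print(context)
```

The chain `pump_a part_of cooling_loop` then `cooling_loop serves reactor_1` answers the question in two hops. Facts three hops away, such as the reactor's building, are not retrieved.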

Implementation Challenges & Considerations

Deploying augmented generation systems involves significant practical challenges:

- Data Management: Setting up, maintaining, and updating the retrieval corpus (chunking, embedding, indexing)

- Retrieval Quality: Ensuring relevance and minimizing noise in retrieved results

- Latency: Balancing retrieval speed with accuracy

- Cost: Managing the computational expense of embedding, retrieval, and generation

- Scalability: Handling large knowledge bases and high query loads

- Evaluation: Developing effective metrics for end-to-end system performance
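Of these challenges, data management is the easiest to illustrate in code. A common first step is splitting documents into overlapping chunks before embedding. Text near a chunk boundary then also appears in the neighboring chunk, which reduces the chance that a relevant passage is split in two. For simplicity, the sketch below splits on whitespace-separated words, whereas many pipelines count model tokens instead. The default sizes (50 words, 10 words of overlap) are arbitrary assumptions.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of chunk_size words, each sharing `overlap`
    words with the previous window."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks

doc = " ".join(f"w{i}" for i in range(12))
for c in chunk_words(doc, chunk_size=5, overlap=2):
    print(c)
# w0 w1 w2 w3 w4
# w3 w4 w5 w6 w7
# w6 w7 w8 w9 w10
# w9 w10 w11
```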

Ethical Dimensions and Responsible AI

The power of these systems necessitates careful ethical consideration:

- Bias: Systems can inherit and amplify biases from their knowledge sources

- Privacy: Handling user data and sensitive information in retrieval sources requires robust security

- Transparency: Making the retrieval-generation process understandable is key for trust

- Misinformation: Systems can confidently present incorrect information if the knowledge base is flawed

- Over-Reliance: Users may uncritically accept AI outputs, reducing critical thinking

Future Trajectories: Towards Truly Intelligent Interaction

Augmented generation continues to evolve rapidly. Techniques such as RAG, RASG, CAG, and advanced hybrids are fundamentally reshaping AI. By grounding LLMs in external knowledge and interaction context, these architectures mitigate key weaknesses of static models, paving the way for more accurate, reliable, adaptable, and trustworthy AI systems. While implementation and ethical challenges persist, ongoing innovation in this space is critical for developing AI that can reason effectively, learn continuously, and interact intelligently, moving us closer to human-aligned artificial intelligence.


Disclaimer: The author is completely responsible for the content of this article. The opinions expressed are their own and do not represent IEEE’s position nor that of the Computer Society nor its Leadership.