Introduction

The rapid rise of artificial intelligence (AI), deepfakes, and automated decision-making systems has made it increasingly difficult for people to tell whether they are communicating with another human being, an AI, or a blend of both. This poses serious questions about trust, security, and social cohesion, especially in high-impact domains like healthcare, finance, and media.

IEEE 3152, officially titled Standard for Transparent Human and Machine Agency Identification, addresses this growing concern by defining clear markers and guidelines for identifying who (or what) we’re interacting with. It builds on the principle that individuals should never be misled about the nature of the entity they are speaking to or receiving content from. By offering structured audio, visual, and metadata-based “marks,” this standard lays the foundation for greater transparency and fosters trustworthy AI-human collaboration.

Overview of the Standard

Purpose and Scope

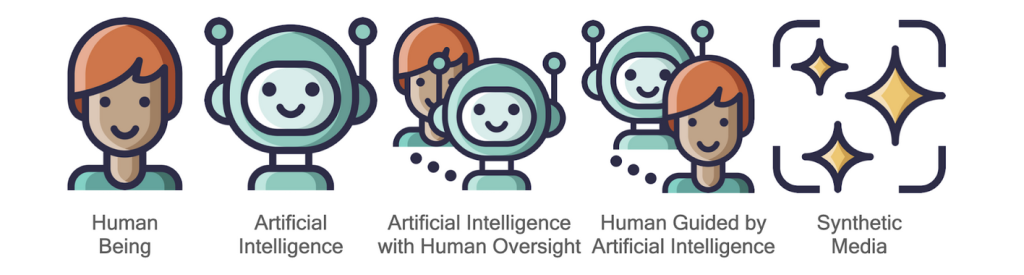

At its core, IEEE 3152 provides a transparent framework that ensures people can identify whether they are communicating with:

- A real human being (unfiltered or unaltered).

- An autonomous AI system.

- A hybrid entity operating under human oversight.

- A human whose actions are steered by AI.

- Media that has been significantly altered by AI (synthetic or deepfaked).

Instead of focusing on how an AI system makes decisions “behind the curtain,” the standard zeroes in on who or what is in control of content or communication. This clarity is critical for mitigating confusion and deception in online chats, phone calls, social media posts, videos, or any other platform.
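To make the five cases above concrete, here is a minimal Python sketch that models them as an enumeration. The class name, member names, and string values are hypothetical and chosen for illustration only; they are not identifiers taken from the standard itself.

```python
from enum import Enum


class AgencyType(Enum):
    """Hypothetical labels for the five cases listed above (not the standard's own names)."""

    HUMAN = "human"                                # real, unaltered human
    AUTONOMOUS_AI = "autonomous_ai"                # AI system acting on its own
    AI_WITH_HUMAN_OVERSIGHT = "ai_with_oversight"  # hybrid under human oversight
    HUMAN_STEERED_BY_AI = "human_steered_by_ai"    # human whose actions are steered by AI
    AI_ALTERED_MEDIA = "ai_altered_media"          # synthetic or deepfaked media


def involves_ai(agency: AgencyType) -> bool:
    """Return True for every category except an unaltered human."""
    return agency is not AgencyType.HUMAN


if __name__ == "__main__":
    for agency in AgencyType:
        print(f"{agency.value}: involves AI = {involves_ai(agency)}")
```

A fixed set of categories like this would let an application decide which disclosure to show without inspecting how the underlying system works, which matches the standard's focus on who or what is in control.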

Relevant Domains and Applications

IEEE 3152 can be implemented across a wide variety of use cases:

- Customer service: Marking call center support that is partially or fully AI-driven.

- Telehealth: Disclosing whether a health application or telepresence robot is under active human control.

- Media and entertainment: Labeling synthetic, AI-generated content such as deepfake videos.

- Finance: Enabling users to see whether financial advice is automated or human-led.

- Social media: Identifying AI-generated posts or accounts.

Key Features and Benefits

1. Clear Identification Marks:

- Visual icons (on-screen or physically displayed on a robot/device) that appear at the start of interactions.

- Audio disclaimers for phone or voice-only channels.

- Metadata “tags” enabling detection by automated tools and traceability across platforms.

2. Improved Trust and Safety:

- Helps prevent malicious impersonation attempts, such as deepfake scams or AI-driven fraud.

- Reduces the risk of emotional or psychological harm by clarifying whether a person or a machine is behind the conversation.

3. Reduced Regulatory and Legal Risks:

- Assists organizations in complying with emerging national and international AI disclosure regulations.

- Offers a consistent approach to labeling, easing the burden on businesses to create ad-hoc transparency solutions.

4. Enhanced Interoperability:

- The standard’s metadata model integrates with existing frameworks like EXIF, XMP, DID, or C2PA for content authenticity.

- Potentially complements other standards related to AI ethics, safety, and data governance.

5. Adaptability Across Industries:

- Flexible enough for consumer-facing systems and more complex, safety-critical environments (e.g., medical robotics or large-scale enterprise platforms).
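As a rough illustration of the three kinds of mark described under the first feature (visual icons, audio disclaimers, and metadata tags), the sketch below picks marks by channel and serializes a small tag to JSON. Everything here is an assumption made for illustration: the channel names, the `AgencyTag` fields, and the JSON layout are hypothetical and do not reproduce the standard's actual metadata model, nor does the sketch show embedding into EXIF, XMP, or C2PA.

```python
import json
from dataclasses import asdict, dataclass
from typing import List

# Hypothetical channel names mapped to the kinds of mark described above.
MARKS_BY_CHANNEL = {
    "screen": ["visual_icon", "metadata_tag"],
    "voice": ["audio_disclaimer"],
    "file": ["metadata_tag"],
}


@dataclass(frozen=True)
class AgencyTag:
    """Hypothetical metadata record; field names are illustrative only."""

    agency: str             # e.g. "autonomous_ai" or "human"
    human_in_control: bool  # whether a person is actively in control


def marks_for(channel: str) -> List[str]:
    """Return the marks to present on a channel, or raise for an unknown one."""
    try:
        return list(MARKS_BY_CHANNEL[channel])
    except KeyError:
        raise ValueError(f"unknown channel: {channel!r}") from None


def to_metadata(tag: AgencyTag) -> str:
    """Serialize the tag to JSON so that automated tools can read it."""
    return json.dumps(asdict(tag), sort_keys=True)


if __name__ == "__main__":
    tag = AgencyTag(agency="autonomous_ai", human_in_control=False)
    print(marks_for("voice"))
    print(to_metadata(tag))
```

Rejecting unknown channels, rather than silently showing no mark, is a deliberate choice in this sketch: a missing disclosure is the failure the standard is meant to prevent.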

Adoption and Impact

- Current Adopters: A growing number of AI-focused organizations and media outlets are experimenting with or piloting such “agency identification” marks, especially in high-risk contexts like telehealth or digital banking.

- Industrial and Societal Benefits: When people can confidently distinguish human-generated from machine-generated interactions, the digital ecosystem becomes more honest and dependable, and misunderstandings and manipulation become harder to pull off.

- Potential for Widespread Application: As AI continues to surge in mainstream adoption, standards like IEEE 3152 can become an industry norm, helping to build broad-based trust in AI-enhanced products and services.

Future Developments

- Ongoing Revisions: With AI evolving rapidly, future iterations of IEEE 3152 are expected to refine the definition of “hybrid” systems and to extend robust marking to next-generation immersive technologies (e.g., augmented reality).

- Interoperability with New Tools: Anticipated integrations with cryptographic watermarking, advanced “fingerprinting” of synthetic media, and new regulatory frameworks (such as EU or national AI laws) should help keep the standard relevant.

- Working Groups:

- The Human or AI Interaction Transparency Working Group leads core development, with broad representation from academia, industry, and ethics communities.

- C/AISC (IEEE Computer Society / Artificial Intelligence Standards Committee) orchestrates broader alignment with other relevant standards and liaises with IEEE’s global network to keep the standard up to date.

Conclusion

In an age when AI can seamlessly mimic human speech, facial expressions, and decision-making, IEEE 3152 is an essential beacon of transparency. By enabling us to identify the “who or what” behind an interaction, it bolsters trust in AI-driven communications and lays the groundwork for more ethical, responsible development of emerging technologies.

If you wish to explore IEEE 3152 or adopt it in your organization, visit the official IEEE Standards Store to learn more or purchase the published standard.

Disclaimer: The authors are solely responsible for the content of this article. The opinions expressed are their own and do not represent the position of IEEE, the Computer Society, or its leadership.